MNC

- Introduction

- Related Work

- Multi-task Network Cascades

- End-to-End Training

- Cascades with More Stages

- Implementation Details

- Experiments

- Conclusion

(CVPR 2016) Instance-aware Semantic Segmentation via Multi-task Network Cascades

Paper: https://arxiv.org/abs/1512.04412

Code: https://github.com/daijifeng001/MNC

We present Multi-task Network Cascades (MNCs) for instance-aware semantic segmentation. Our model consists of three networks, which respectively differentiate instances, estimate masks, and categorize objects. Our solution is a clean, single-step training framework that can be generalized to cascades with more stages.

Introduction

Problem: current methods all rely on mask proposal methods, which are slow at inference time.

The instance-aware semantic segmentation task can be decomposed into three different but related sub-tasks:

Differentiating instances. In this sub-task, the instances can be represented by bounding boxes that are class-agnostic.

Estimating masks. In this sub-task, a pixel-level mask is predicted for each instance.

Categorizing objects. In this sub-task, the category-wise label is predicted for each mask-level instance.

Multi-task Network Cascades (MNCs)

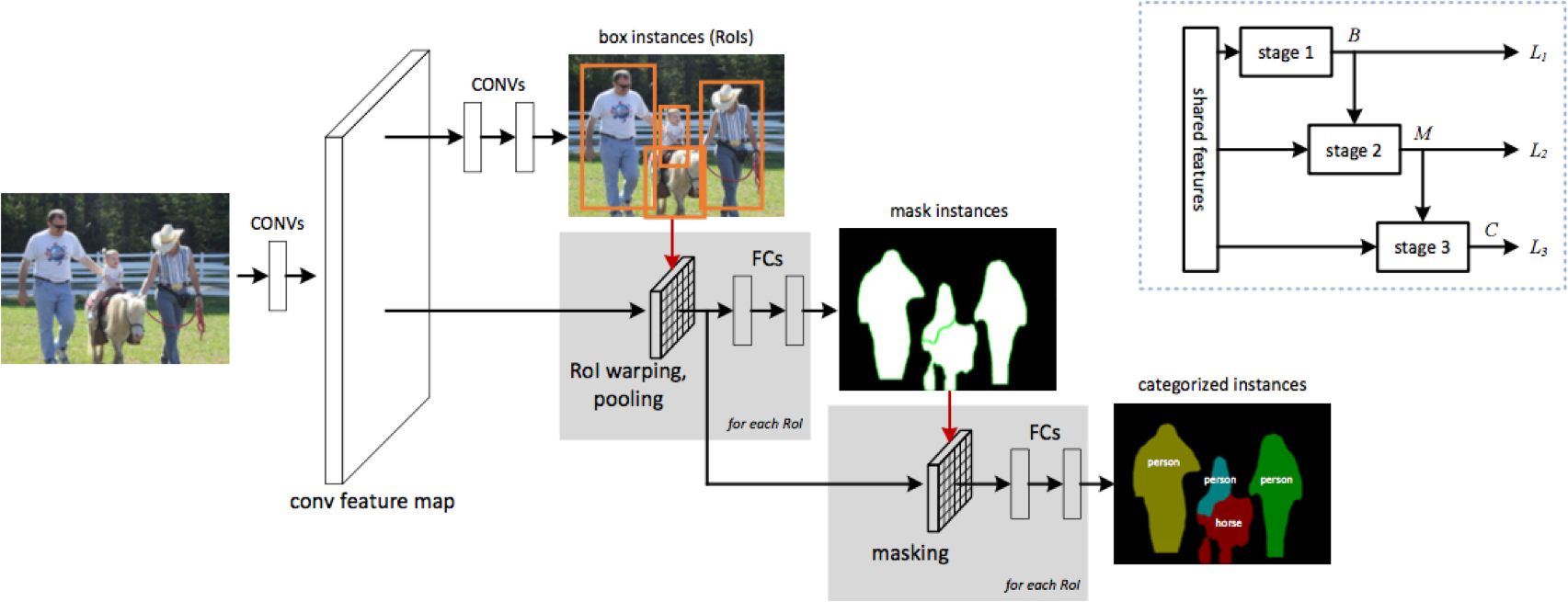

Our network cascades have three stages, each of which addresses one sub-task. The three stages share their features.

Feature sharing greatly reduces the test-time computation, and may also improve feature learning thanks to the underlying commonality among the tasks.

To make back-propagation theoretically valid, we develop a layer that is differentiable with respect to the spatial coordinates of the predicted boxes, so that the corresponding gradient terms can be computed.

Related Work

Object detection methods [10, 15, 9, 26] involve predicting object bounding boxes and categories.

Using mask-level region proposals, instance-aware semantic segmentation can be addressed based on the R-CNN philosophy, as in R-CNN [10], SDS [13], and Hypercolumn [14]. In these methods, generating the mask-level proposals is a bottleneck at inference time. … Its accuracy for instance-aware semantic segmentation is yet to be evaluated.

Category-wise semantic segmentation is elegantly tackled by end-to-end trained FCNs [23]. However, FCNs are not able to distinguish instances of the same category.

Multi-task Network Cascades

Input: an image of arbitrary size.

Output: instance-aware semantic segmentation results.

The cascade has three stages:

- Stage 1: proposing box-level instances
- Stage 2: regressing mask-level instances
- Stage 3: categorizing each instance

Each stage involves a loss term, but a later stage’s loss relies on the output of an earlier stage, so the three loss terms are not independent.

We train the entire network cascade end-to-end with a unified loss function.

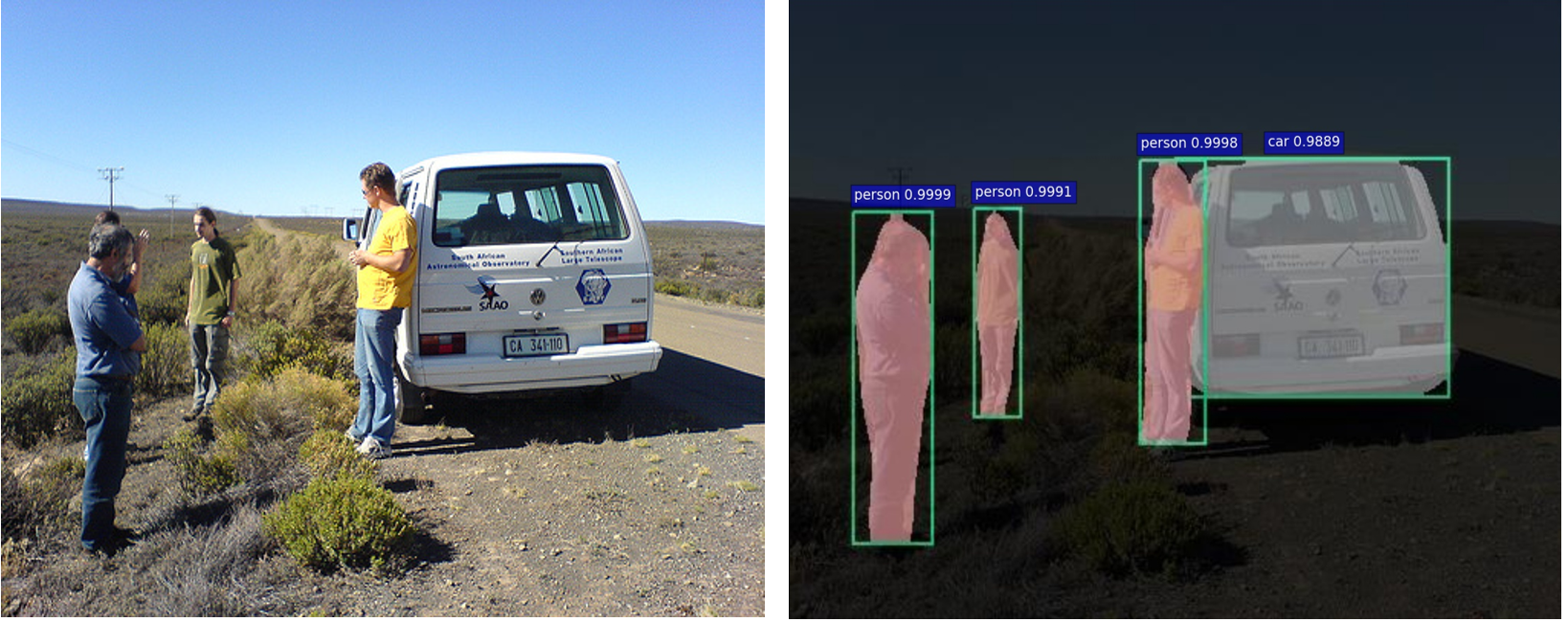

Figure 2. Multi-task Network Cascades for instance-aware semantic segmentation. At the top right corner is a simplified illustration.

Regressing Box-level Instances

The network structure and loss function of this stage follow those of Region Proposal Networks (RPNs) [26].

An RPN predicts bounding box locations and objectness scores in a fully-convolutional form.

We use the RPN loss function given in [26]. It serves as the loss term \(L_1\) of stage 1 and has the form:

\[L_1 = L_1(B(\Theta))\]

Here \(\Theta\) represents all network parameters to be optimized, and \(B\) is the network output of this stage, representing a list of boxes.
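As a hedged illustration of the RPN loss referenced above, here is a minimal plain-Python sketch of its Faster R-CNN form [26]: a binary log loss on objectness plus a smooth-L1 box-regression loss that is counted only for positive anchors. Assumptions: anchors are already sampled and labeled, regression targets are precomputed, both terms are simply averaged over the sampled anchors, and the weight `lam` defaults to 1 (the normalization and weighting in [26] differ in detail). `smooth_l1` and `rpn_loss` are hypothetical names.

```python
import math


def smooth_l1(x):
    # Smooth L1 as in Fast/Faster R-CNN: 0.5 * x^2 if |x| < 1, else |x| - 0.5
    ax = abs(x)
    return 0.5 * x * x if ax < 1.0 else ax - 0.5


def rpn_loss(probs, labels, deltas, targets, lam=1.0):
    """Simplified RPN loss over a batch of sampled anchors (hypothetical helper).

    probs:   predicted objectness p_i in (0, 1)
    labels:  anchor labels p_i* in {0, 1}
    deltas:  predicted (tx, ty, tw, th) per anchor
    targets: regression targets (tx*, ty*, tw*, th*) per anchor
    """
    n = len(probs)
    cls, reg = 0.0, 0.0
    for p, y, t, t_star in zip(probs, labels, deltas, targets):
        # binary log loss on objectness
        cls -= math.log(p) if y == 1 else math.log(1.0 - p)
        # box regression is only counted for positive anchors (p_i* = 1)
        if y == 1:
            reg += sum(smooth_l1(a - b) for a, b in zip(t, t_star))
    # simplified normalization (assumption): both terms averaged over n anchors
    return cls / n + lam * reg / n


probs = [0.9, 0.2]
labels = [1, 0]
deltas = [(0.1, 0.0, 0.2, -0.1), (0.5, 0.5, 0.5, 0.5)]
targets = [(0.0, 0.0, 0.0, 0.0)] * 2
print(rpn_loss(probs, labels, deltas, targets))
```

The negative anchor's regression output is ignored entirely, which is why its large deltas do not affect the result.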

Regressing Mask-level Instances

Input: the shared convolutional features and stage-1 boxes.

Output: a pixel-level segmentation mask for each box proposal.

Given a box predicted by stage 1, we extract a feature for this box by Region-of-Interest (RoI) pooling [15, 9], which produces a fixed-size feature from a box of arbitrary size.

We append two extra fully-connected (fc) layers to this feature for each box. The first fc layer (with ReLU) reduces the dimension to 256, followed by the second fc layer that regresses a pixel-wise mask.
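A minimal sketch of the stage-2 mask head described above, in plain Python: RoI feature → fc(256) + ReLU → fc(m·m) → per-pixel sigmoid, reshaped to an m × m mask. The mask resolution m = 28, the sigmoid output, the toy 64-d input, and the Gaussian weights are assumptions for illustration; `mask_head` and the other helpers are hypothetical, not the MNC code.

```python
import math
import random


def fc(x, weights, bias):
    # fully-connected layer: y_j = sum_k W[j][k] * x[k] + b[j]
    return [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]


def relu(x):
    return [max(0.0, v) for v in x]


def sigmoid(x):
    return [1.0 / (1.0 + math.exp(-v)) for v in x]


def init_fc(n_in, n_out, rng, std=0.01):
    # toy Gaussian initialization (the document initializes extra layers as in [17])
    weights = [[rng.gauss(0.0, std) for _ in range(n_in)] for _ in range(n_out)]
    return weights, [0.0] * n_out


def mask_head(roi_feature, params, m=28):
    (w1, b1), (w2, b2) = params
    hidden = relu(fc(roi_feature, w1, b1))  # first fc + ReLU: reduce to 256-d
    probs = sigmoid(fc(hidden, w2, b2))     # second fc: m*m per-pixel values
    return [probs[r * m:(r + 1) * m] for r in range(m)]  # reshape to m x m


rng = random.Random(0)
d_in, m = 64, 28  # toy RoI feature size and assumed mask resolution
params = [init_fc(d_in, 256, rng), init_fc(256, m * m, rng)]
roi_feature = [rng.random() for _ in range(d_in)]
mask = mask_head(roi_feature, params, m)
print(len(mask), len(mask[0]))  # 28 28
```

Because the mask is regressed by an fc layer rather than a convolution, every output pixel can depend on the whole RoI feature.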

The loss term \(L_2\) of stage 2 for regressing masks has the following form:

\[L_2 = L_2(M(\Theta) \mid B(\Theta))\]

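Here \(M\) is the network output of this stage, representing a list of masks. Because each mask is computed from features pooled within a stage-1 box, \(L_2\) is conditioned on \(B(\Theta)\).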
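The conditioning on \(B(\Theta)\) can be made concrete: for each box, the regression target is the ground-truth mask cropped to that box and resampled to m × m, so moving the box changes the target. The sketch below assumes nearest-neighbour resampling at cell centres and a mean per-pixel binary cross-entropy as \(L_2\); both choices, and the helper names, are assumptions rather than the paper's exact definitions.

```python
import math


def crop_resize_nearest(gt_mask, box, m):
    # crop a full-image 0/1 mask to box (x0, y0, x1, y1) and resample to m x m
    x0, y0, x1, y1 = box
    h, w = len(gt_mask), len(gt_mask[0])
    target = []
    for r in range(m):
        y = y0 + (r + 0.5) * (y1 - y0) / m  # sample at cell centres
        yi = min(max(int(y), 0), h - 1)
        row = []
        for c in range(m):
            x = x0 + (c + 0.5) * (x1 - x0) / m
            xi = min(max(int(x), 0), w - 1)
            row.append(gt_mask[yi][xi])
        target.append(row)
    return target


def mask_loss(pred, target, eps=1e-7):
    # mean per-pixel binary cross-entropy (assumed form of L2 for one box)
    total, count = 0.0, 0
    for p_row, t_row in zip(pred, target):
        for p, t in zip(p_row, t_row):
            p = min(max(p, eps), 1.0 - eps)
            total -= t * math.log(p) + (1 - t) * math.log(1.0 - p)
            count += 1
    return total / count


gt = [[0, 0, 0, 0],
      [0, 1, 1, 0],
      [0, 1, 1, 0],
      [0, 0, 0, 0]]
box = (1.0, 1.0, 3.0, 3.0)  # a stage-1 box in pixel coordinates
target = crop_resize_nearest(gt, box, m=2)
pred = [[0.9, 0.9], [0.9, 0.9]]
print(target, mask_loss(pred, target))
print(crop_resize_nearest(gt, (0.0, 0.0, 2.0, 2.0), m=2))  # shifted box, different target
```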

Categorizing Instances

Input: the shared convolutional features, stage-1 boxes, and stage-2 masks.

Output: category scores for each instance.

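Mask-based pathway (inspired by the feature masking strategy in [7]): the RoI-pooled features of instance \(i\) are multiplied element-wise by its predicted mask (the masked features are then fed into two 4096-d fc layers):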

\[\mathcal{F}_i^{Mask}(\Theta) = \mathcal{F}_i^{RoI}(\Theta) \cdot M_i(\Theta)\]
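The feature-masking equation above amounts to an element-wise product between each channel of the RoI feature and the predicted mask. A minimal sketch, assuming the RoI feature is stored as C × H' × W' nested lists and that the m × m mask is resized to H' × W' by nearest-neighbour sampling (a simplification chosen here); the helpers are hypothetical.

```python
def resize_nearest(mask, h, w):
    # nearest-neighbour resize of an m x m mask to h x w (simplifying assumption)
    mh, mw = len(mask), len(mask[0])
    return [[mask[min(int((r + 0.5) * mh / h), mh - 1)][min(int((c + 0.5) * mw / w), mw - 1)]
             for c in range(w)]
            for r in range(h)]


def mask_features(roi_feat, mask):
    """F_mask = F_roi * M, applied to every channel (element-wise product).

    roi_feat: C x H' x W' nested lists; mask: m x m foreground probabilities.
    """
    h, w = len(roi_feat[0]), len(roi_feat[0][0])
    mask_hw = resize_nearest(mask, h, w)
    return [[[channel[r][c] * mask_hw[r][c] for c in range(w)] for r in range(h)]
            for channel in roi_feat]


roi_feat = [[[1.0, 2.0], [3.0, 4.0]],   # channel 0
            [[5.0, 6.0], [7.0, 8.0]]]   # channel 1
mask = [[1.0] * 4, [1.0] * 4, [0.0] * 4, [0.0] * 4]  # top half is foreground
print(mask_features(roi_feat, mask))
```

Features under the predicted background are zeroed in every channel, which is why a separate box-based pathway is useful when the mask removes most of the RoI.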

Box-based pathway: the RoI-pooled features are directly fed into two 4096-d fc layers.

The outputs of the mask-based and box-based pathways are concatenated. On top of the concatenation, an (N+1)-way softmax classifier predicts N categories plus one background category.

Mask-based pathway: focuses on the foreground of the predicted mask.

Box-based pathway: may address the cases where the feature is mostly masked out by the mask-based pathway (e.g., on background).

The loss term \(L_3\) of stage 3 has the following form:

\[L_3 = L_3(C(\Theta) \mid B(\Theta), M(\Theta))\]

Here \(C\) is the network output of this stage, representing a list of category predictions for all instances.
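The stage-3 classifier can be sketched as concatenating the two pathway outputs, applying an (N+1)-way linear layer and softmax, and scoring the result with a log loss. Assumptions: toy 2-d pathway outputs instead of 4096-d, index 0 as the background class, and a standard cross-entropy standing in for \(L_3\); all names are hypothetical.

```python
import math


def softmax(logits):
    # numerically stable softmax
    top = max(logits)
    exps = [math.exp(v - top) for v in logits]
    total = sum(exps)
    return [e / total for e in exps]


def classify(mask_path_out, box_path_out, weights, bias):
    # concatenate the two pathway outputs, then an (N+1)-way linear layer + softmax
    x = mask_path_out + box_path_out  # list concatenation
    logits = [sum(w * v for w, v in zip(row, x)) + b for row, b in zip(weights, bias)]
    return softmax(logits)


def cls_loss(probs, label):
    # log loss on the ground-truth class (assumed form of L3 for one instance)
    return -math.log(probs[label])


# Toy example: N = 2 categories plus background (index 0), 2-d output per pathway
weights = [[0.0, 0.0, 0.0, 0.0],   # background
           [1.0, 0.0, 0.0, 0.0],   # category 1
           [0.0, 0.0, 0.0, 2.0]]   # category 2
bias = [0.0, 0.0, 0.0]
probs = classify([1.0, 0.0], [0.0, 1.0], weights, bias)
print(probs, cls_loss(probs, 2))
```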

End-to-End Training

We define the loss function of the entire cascade as:

\[L(\Theta) = L_1(B(\Theta)) + L_2(M(\Theta) \mid B(\Theta)) + L_3(C(\Theta) \mid B(\Theta), M(\Theta))\]

This loss function differs from traditional multi-task learning because the loss term of a later stage depends on the outputs of the earlier ones.
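A scalar toy example (entirely hypothetical functions, not the MNC stages) shows why this dependency matters for gradients: when stage 2's output depends on stage 1's output \(b(\theta)\), the total derivative of \(L_2\) includes a path through \(b\). Dropping that path, as happens when boxes are treated as fixed inputs, gives a gradient that disagrees with a finite-difference check.

```python
def b(theta):
    # stage-1 output (a stand-in for the boxes B)
    return 2.0 * theta


def m(theta, b_val):
    # stage-2 output depends on theta directly and on the stage-1 output
    return theta * b_val


def total_loss(theta):
    b_val = b(theta)
    return (b_val - 1.0) ** 2 + (m(theta, b_val) - 3.0) ** 2  # L1 + L2


def grad_full(theta):
    b_val = b(theta)
    m_val = m(theta, b_val)
    d_l1 = 2.0 * (b_val - 1.0) * 2.0   # dL1/db * db/dtheta
    dm = b_val + theta * 2.0           # dm/dtheta + dm/db * db/dtheta
    return d_l1 + 2.0 * (m_val - 3.0) * dm


def grad_fixed_boxes(theta):
    # ignores the path through b inside L2 (treats b as a constant input)
    b_val = b(theta)
    m_val = m(theta, b_val)
    return 2.0 * (b_val - 1.0) * 2.0 + 2.0 * (m_val - 3.0) * b_val


theta, eps = 1.0, 1e-6
numeric = (total_loss(theta + eps) - total_loss(theta - eps)) / (2 * eps)
print(grad_full(theta), grad_fixed_boxes(theta), numeric)
```

At \(\theta = 1\) the full gradient is -4.0 while the fixed-box gradient is 0.0; the finite-difference estimate agrees with the full gradient.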

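The main technical challenge is the spatial transform of a predicted box \(B_i(\Theta)\), which determines RoI pooling. Standard RoI pooling (max pooling over a quantized grid) is not differentiable with respect to the box coordinates, so the gradient through \(B_i(\Theta)\) cannot be computed directly.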

We develop a differentiable RoI warping layer that accounts for the gradient w.r.t. the predicted box positions, which addresses the dependency on \(B(\Theta)\). The dependency on \(M(\Theta)\) is handled accordingly.

- Differentiable RoI Warping Layers

We perform RoI pooling with a differentiable RoI warping layer followed by standard max pooling.

The RoI warping layer crops a feature map region and warps it into a target size by interpolation.
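A minimal sketch of RoI pooling as described above, i.e. a bilinear RoI warping step followed by standard max pooling, in plain Python. Assumptions: the box is given as (x0, y0, x1, y1) in feature-map coordinates, sample points are evenly spaced and corner-aligned from x0 to x1 (one of several possible conventions), and the kernel \(\kappa(d) = \max(0, 1 - |d|)\) performs the interpolation; all helper names are hypothetical.

```python
def kappa(d):
    # bilinear interpolation kernel: kappa(d) = max(0, 1 - |d|)
    return max(0.0, 1.0 - abs(d))


def grid(lo, hi, n):
    # n evenly spaced, corner-aligned sample positions in [lo, hi] (assumed convention)
    if n == 1:
        return [(lo + hi) / 2.0]
    return [lo + k * (hi - lo) / (n - 1) for k in range(n)]


def roi_warp(feat, box, out_h, out_w):
    # crop box (x0, y0, x1, y1), in feature-map coordinates, and warp it to out_h x out_w
    x0, y0, x1, y1 = box
    h, w = len(feat), len(feat[0])
    warped = []
    for y in grid(y0, y1, out_h):
        row = []
        for x in grid(x0, x1, out_w):
            # weighted sum over the map; only the <= 4 nearest cells have nonzero weight
            row.append(sum(kappa(x - u) * kappa(y - v) * feat[v][u]
                           for v in range(h) for u in range(w)))
        warped.append(row)
    return warped


def max_pool(x, k):
    # standard non-overlapping k x k max pooling
    return [[max(x[r + i][c + j] for i in range(k) for j in range(k))
             for c in range(0, len(x[0]) - k + 1, k)]
            for r in range(0, len(x) - k + 1, k)]


def roi_pool(feat, box, out_size, k=2):
    # RoI pooling = differentiable warping to (k*out_size)^2, then k x k max pooling
    return max_pool(roi_warp(feat, box, k * out_size, k * out_size), k)


feat = [[4.0 * v + u for u in range(4)] for v in range(4)]  # toy 4 x 4 single-channel map
print(roi_pool(feat, (0.0, 0.0, 3.0, 3.0), out_size=2))
print(roi_warp(feat, (0.5, 0.5, 2.5, 2.5), 3, 3)[0][0])
```

Unlike quantized RoI pooling, each warped value is a piecewise-linear function of the box coordinates, so a gradient with respect to the box exists almost everywhere.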

\[\mathcal{F}_i^{RoI}(\Theta) = G(B_i(\Theta)) \mathcal{F}(\Theta)\]

\[\frac{\partial L_2}{\partial B_i} = \frac{\partial L_2}{\partial \mathcal{F}_i^{RoI}} \frac{\partial G}{\partial B_i} \mathcal{F}\]

Here \(\mathcal{F}(\Theta)\) is reshaped as an n-dimensional vector, with \(n = WH\) for a full-image feature map of a spatial size \(W \times H\). \(G\) represents the cropping and warping operations, and is an \(n'\)-by-\(n\) matrix where \(n' = W'H'\) corresponds to the pre-defined RoI warping output resolution \(W' \times H'\). \(\mathcal{F}_i^{RoI}(\Theta)\) is an \(n'\)-dimensional vector representing the RoI warping output.
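The matrix form above can be checked numerically. The sketch below builds \(G(B_i)\) as an \(n'\)-by-\(n\) matrix of bilinear weights, computes \(\mathcal{F}_i^{RoI} = G\mathcal{F}\), and evaluates the gradient for the left box coordinate \(x_0\) as \(\frac{\partial L_2}{\partial \mathcal{F}_i^{RoI}}\frac{\partial G}{\partial x_0}\mathcal{F}\), comparing it with a finite difference. Assumptions: corner-aligned sampling, a hypothetical squared-error loss standing in for \(L_2\), and derivative 0 at the kinks of \(\kappa\). Only \(x_0\) is differentiated to keep the sketch short; the other coordinates follow the same pattern.

```python
def kappa(d):
    # bilinear kernel kappa(d) = max(0, 1 - |d|)
    return max(0.0, 1.0 - abs(d))


def dkappa(d):
    # derivative of kappa; 0 is used at the kinks d in {-1, 0, 1}
    if d == 0.0 or abs(d) >= 1.0:
        return 0.0
    return -1.0 if d > 0 else 1.0


def build_G(box, h, w, out_h, out_w):
    """Return G (n' x n) and dG/dx0 for box (x0, y0, x1, y1); needs out_h, out_w >= 2."""
    x0, y0, x1, y1 = box
    xs = [x0 + j * (x1 - x0) / (out_w - 1) for j in range(out_w)]
    ys = [y0 + i * (y1 - y0) / (out_h - 1) for i in range(out_h)]
    G, dG = [], []
    for y in ys:                                # output rows, row-major n' index
        for j, x in enumerate(xs):
            dx_dx0 = 1.0 - j / (out_w - 1)      # since x = x0 + j*(x1-x0)/(out_w-1)
            G.append([kappa(x - u) * kappa(y - v) for v in range(h) for u in range(w)])
            dG.append([dkappa(x - u) * dx_dx0 * kappa(y - v)
                       for v in range(h) for u in range(w)])
    return G, dG


def matvec(A, x):
    return [sum(a * b for a, b in zip(row, x)) for row in A]


def loss_l2(F_roi, target):
    # hypothetical stand-in for L2: squared error against a fixed target
    return 0.5 * sum((a - b) ** 2 for a, b in zip(F_roi, target))


def grad_x0(box, F, h, w, out_h, out_w, target):
    # dL2/dx0 = (dL2/dF_roi) (dG/dx0) F
    G, dG = build_G(box, h, w, out_h, out_w)
    upstream = [a - b for a, b in zip(matvec(G, F), target)]  # dL2/dF_roi
    return sum(g * d for g, d in zip(upstream, matvec(dG, F)))


h = w = 4
F = [float((7 * v + 3 * u) % 5) for v in range(h) for u in range(w)]  # flattened n = h*w map
box, target = (0.3, 0.2, 2.6, 2.7), [0.0] * 9


def objective(x0):
    G, _ = build_G((x0,) + box[1:], h, w, 3, 3)
    return loss_l2(matvec(G, F), target)


eps = 1e-6
numeric = (objective(box[0] + eps) - objective(box[0] - eps)) / (2 * eps)
print(grad_x0(box, F, h, w, 3, 3, target), numeric)
```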

Cascades with More Stages

Next we extend the cascade model to more stages within the above MNC framework.

The new stages 4 and 5 have the same structure as stages 2 and 3, except that they use the boxes regressed by stage 3 as new proposals.
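The stage-4/5 data flow can be sketched with the stage heads passed in as callables. Assumptions: boxes are (cx, cy, w, h); stage 3 outputs class-agnostic box deltas in the Fast/Faster R-CNN parameterization (x' = x + w·dx, w' = w·exp(dw)); and the same stage-2/3 heads are reused for stages 4/5. `apply_deltas`, `five_stage_inference`, and the stub heads are hypothetical.

```python
import math


def apply_deltas(box, deltas):
    # box: (cx, cy, w, h); deltas: (dx, dy, dw, dh), Fast/Faster R-CNN-style (assumed)
    cx, cy, w, h = box
    dx, dy, dw, dh = deltas
    return (cx + w * dx, cy + h * dy, w * math.exp(dw), h * math.exp(dh))


def five_stage_inference(features, stage1, mask_head, cls_head):
    """Hypothetical 5-stage data flow; the heads are callables supplied by the caller.

    cls_head returns (scores, box_deltas), one entry per input box.
    """
    boxes = stage1(features)                       # stage 1: box proposals
    masks = mask_head(features, boxes)             # stage 2: masks for stage-1 boxes
    _, deltas = cls_head(features, boxes, masks)   # stage 3: scores + box regression
    new_boxes = [apply_deltas(b, d) for b, d in zip(boxes, deltas)]
    new_masks = mask_head(features, new_boxes)     # stage 4: same structure as stage 2
    new_scores, _ = cls_head(features, new_boxes, new_masks)  # stage 5: same as stage 3
    return new_boxes, new_masks, new_scores


# Stub heads for illustration only
def stub_stage1(features):
    return [(10.0, 10.0, 4.0, 2.0)]


def stub_mask_head(features, boxes):
    return [b[2] * b[3] for b in boxes]  # "mask" = box area, a placeholder


def stub_cls_head(features, boxes, masks):
    return [0.9] * len(boxes), [(0.5, 0.0, 0.0, 0.0)] * len(boxes)


print(five_stage_inference(None, stub_stage1, stub_mask_head, stub_cls_head))
```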

Implementation Details

We use the ImageNet pre-trained models (e.g., VGG-16 [27]) to initialize the shared convolutional layers and the corresponding 4096-d fc layers. The extra layers are initialized randomly as in [17].

We do not adopt multi-scale training/testing [15, 9], as it does not provide a good trade-off between speed and accuracy [9].

We use 5-stage inference for both 3-stage and 5-stage trained structures.

We evaluate using the mean Average Precision computed with mask-level IoU, which is referred to as mean \(AP^r\) [13] or simply \(mAP^r\).
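For concreteness, a per-category \(AP^r\) can be sketched as follows: rank detections by score, match each to the ground-truth instance with the highest mask IoU, count a true positive if that IoU reaches the threshold (0.5 here) and the instance is not already matched, then integrate the precision-recall curve. \(mAP^r\) averages this over categories. The all-point interpolation and the set-of-pixels mask representation are assumptions; the evaluation code of [13] may differ in such details. Helper names are hypothetical.

```python
def mask_iou(a, b):
    # a, b: sets of (row, col) foreground pixels
    union = len(a | b)
    return len(a & b) / union if union else 0.0


def average_precision(dets, gts, thresh=0.5):
    """AP^r for one category. dets: list of (score, mask); gts: list of masks."""
    dets = sorted(dets, key=lambda d: d[0], reverse=True)
    matched = [False] * len(gts)
    tp = fp = 0
    precisions, recalls = [], []
    for _, mask in dets:
        # match against the ground-truth instance with the highest mask IoU
        ious = [mask_iou(mask, gt) for gt in gts]
        j = max(range(len(gts)), key=lambda k: ious[k]) if gts else -1
        if j >= 0 and ious[j] >= thresh and not matched[j]:
            matched[j] = True
            tp += 1
        else:
            fp += 1  # low overlap or duplicate detection
        precisions.append(tp / (tp + fp))
        recalls.append(tp / len(gts) if gts else 0.0)
    # all-point interpolation: make precision non-increasing, then sum the area
    for k in range(len(precisions) - 2, -1, -1):
        precisions[k] = max(precisions[k], precisions[k + 1])
    ap, prev_recall = 0.0, 0.0
    for p, r in zip(precisions, recalls):
        ap += (r - prev_recall) * p
        prev_recall = r
    return ap


gts = [{(0, 0), (0, 1)}, {(5, 5)}]
dets = [(0.9, {(0, 0), (0, 1)}), (0.8, {(9, 9)}), (0.7, {(5, 5)})]
print(average_precision(dets, gts))  # 0.5 * 1 + 0.5 * 2/3 = 0.8333...
```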

Experiments

Experiments on PASCAL VOC 2012

Experiments on MS COCO Segmentation

This dataset consists of 80 object categories for instance-aware semantic segmentation.

On top of our baseline, we further adopt global context modeling and multi-scale testing as in [16], as well as ensembling.

Our final result on the test-challenge set is 28.2%/51.5% (\(mAP^r\)@[.5:.95] / \(mAP^r\)@0.5), which won 1st place in the COCO segmentation track of the ILSVRC & COCO 2015 competitions.