CGAN

Conditional Generative Adversarial Nets

Paper: https://arxiv.org/abs/1411.1784

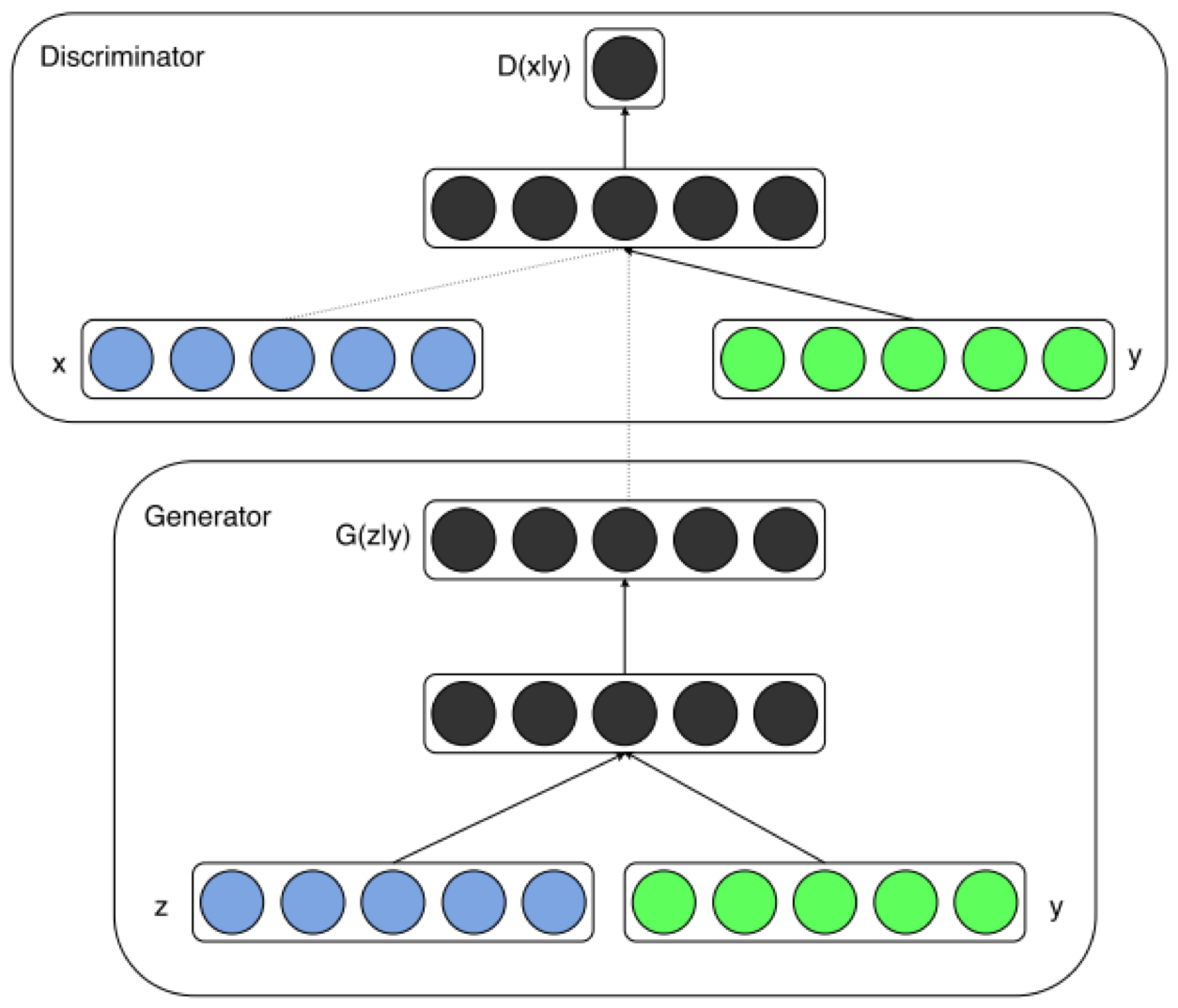

we introduce the conditional version of generative adversarial nets, which can be constructed by simply feeding the data, y, we wish to condition on to both the generator and discriminator.

We show that this model can generate MNIST digits conditioned on class labels.

We also illustrate how this model could be used to learn a multi-modal model, and provide preliminary examples of an application to image tagging in which we demonstrate how this approach can generate descriptive tags which are not part of training labels.

Introduction

by conditioning the model on additional information it is possible to direct the data generation process.

Related Work

Multi-modal Learning For Image Labelling

Conditional Adversarial Nets

Generative Adversarial Nets

Conditional Adversarial Nets

Generative adversarial nets can be extended to a conditional model if both the generator and discriminator are conditioned on some extra information \(y\).

The objective function of a two-player minimax game would be as

\[\min_G \max_D V(D,G) = \mathbb{E}_{x \sim p_{data}(x)} [\log D(x \mid y)] + \mathbb{E}_{z \sim p_z(z)} [\log (1 - D(G(z \mid y)))]\]

Experimental Results

Unimodal

We trained a conditional adversarial net on MNIST images conditioned on their class labels, encoded

as one-hot vectors.