CoGAN

- Introduction

- Generative Adversarial Network

- Coupled Generative Adversarial Network

- Experiments

- Applications

- Related Work

- Conclusion

(NIPS 2016) Coupled Generative Adversarial Networks

Paper: https://arxiv.org/abs/1606.07536

Code: https://github.com/mingyuliutw/CoGAN

Introduction

A joint distribution of images in two domains can be learned from a dataset of corresponding image pairs. However, building a dataset of corresponding images is often difficult.

We propose the coupled generative adversarial network (CoGAN) framework, which learns a joint distribution for generating pairs of corresponding images in two domains without requiring any corresponding images in the training set.

We show that by enforcing a simple weight-sharing constraint, the CoGAN learns to capture the correspondence between the two domains in an unsupervised fashion.

Generative Adversarial Network

The objective of the generative model is to synthesize images resembling real images, while the objective of the discriminative model is to distinguish real images from synthesized ones.
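Written with \(f\) for the discriminative model and \(g\) for the generative model (the notation used in the rest of these notes), the standard GAN minimax game can be stated as follows. This is the usual formulation with signs flipped so that \(f\) minimizes and \(g\) maximizes. \(p_X\) (data distribution) and \(p_Z\) (noise prior) are notation chosen for this sketch.

```latex
\max_{g} \min_{f} V(f, g) \equiv
  \mathbb{E}_{x \sim p_X}\left[-\log f(x)\right]
  + \mathbb{E}_{z \sim p_Z}\left[-\log\left(1 - f(g(z))\right)\right]
```

\(f\) minimizes the cross-entropy of labeling real images 1 and synthesized images 0. \(g\) maximizes the same quantity, which pushes \(f(g(z))\) toward 1, i.e. toward images the discriminator mistakes for real ones.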

Coupled Generative Adversarial Network

We note that the framework can be easily extended to generating corresponding images in multiple domains by adding more GANs.

In a generative model, the bottom (first) layers decode high-level semantics and the top (last) layers decode low-level details.

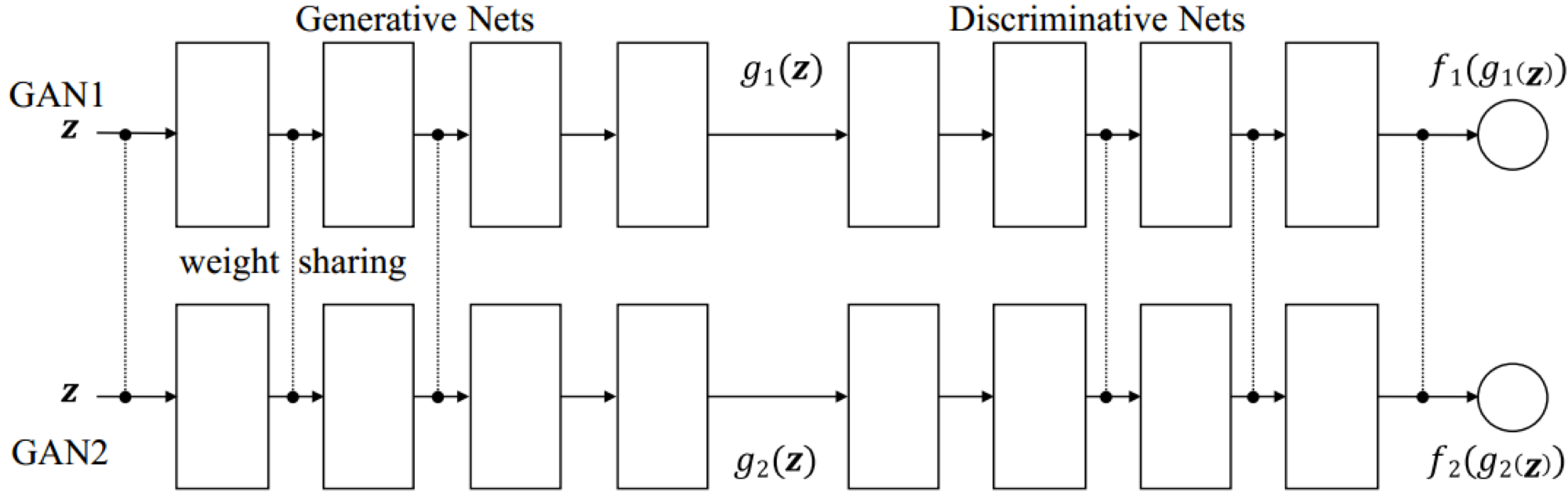

Let \(g_1\) and \(g_2\) be the generative models of GAN1 and GAN2.

We force the bottom layers of \(g_1\) and \(g_2\) to have identical structure and share their weights, so that both generators decode high-level semantics in the same way.
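A minimal sketch of generator weight sharing, assuming a toy two-layer fully connected generator in pure Python. The names `Linear`, `Generator` and `make_coupled_generators` are hypothetical illustrations, not the authors' code. Sharing is expressed by having every generator hold a reference to the *same* bottom-layer object, while each keeps its own top layer. The `n_domains` parameter reflects the note above that the framework extends to more domains by adding more GANs.

```python
import random
from typing import List

Vector = List[float]


class Linear:
    """Minimal fully connected layer y = W x + b (pure Python, illustration only)."""

    def __init__(self, n_in: int, n_out: int, rng: random.Random) -> None:
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out

    def __call__(self, x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + bi
                for row, bi in zip(self.W, self.b)]


def relu(x: Vector) -> Vector:
    return [max(0.0, v) for v in x]


class Generator:
    """Applies its layers in order, with ReLU between consecutive layers."""

    def __init__(self, layers: List[Linear]) -> None:
        self.layers = layers

    def __call__(self, z: Vector) -> Vector:
        h = z
        for i, layer in enumerate(self.layers):
            h = layer(h)
            if i < len(self.layers) - 1:
                h = relu(h)
        return h


def make_coupled_generators(z_dim: int, hidden_dim: int, out_dim: int,
                            n_domains: int = 2, seed: int = 0) -> List[Generator]:
    rng = random.Random(seed)
    # Bottom layer (high-level semantics): ONE object referenced by every generator,
    # so any change to its weights is seen by all domains.
    shared_bottom = Linear(z_dim, hidden_dim, rng)
    # Top layer (low-level details): a separate, unshared object per domain.
    return [Generator([shared_bottom, Linear(hidden_dim, out_dim, rng)])
            for _ in range(n_domains)]
```

Calling `g1(z)` and `g2(z)` with the same latent vector `z` yields one generated pair. In a real framework the same effect comes from reusing a single module or parameter tensor in both networks, so gradients from both GANs accumulate into the shared weights.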

Let \(f_1\) and \(f_2\) be the discriminative models of GAN1 and GAN2.

Figure 1: The proposed CoGAN framework consists of a pair of generative adversarial nets (GANs): GAN1 and GAN2. Each has a generative model that can synthesize realistic images in one domain and a discriminative model that can classify whether an input image is a real image or a synthesized image in the domain. We tie the weights of the first few layers (responsible for decoding high-level semantics) of the generative models, \(g_1\) and \(g_2\). We also tie the weights of the last few layers (responsible for encoding high-level semantics) of the discriminative models, \(f_1\) and \(f_2\). This weight-sharing constraint forces GAN1 and GAN2 to learn to synthesize pairs of corresponding images in the two domains, where the correspondence is defined in the sense that the two images share the same high-level abstraction but have different low-level realizations.

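We force \(f_1\) and \(f_2\) to have identical top layers by sharing the weights of those layers. In a discriminative model the roles are reversed: the bottom layers extract low-level features and the top layers encode high-level semantics.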
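A minimal sketch of discriminator weight sharing under the same toy assumptions (pure Python, one hidden layer). `Discriminator` and `make_coupled_discriminators` are hypothetical names used only for illustration. Here the *top* layer is the shared object and each bottom layer is private. Because only the top is shared, the private bottom layers can even accept different input sizes per domain, as long as they all produce the same hidden width.

```python
import math
import random
from typing import List

Vector = List[float]


class Linear:
    """Minimal fully connected layer y = W x + b (pure Python, illustration only)."""

    def __init__(self, n_in: int, n_out: int, rng: random.Random) -> None:
        self.W = [[rng.gauss(0.0, 0.1) for _ in range(n_in)] for _ in range(n_out)]
        self.b = [0.0] * n_out

    def __call__(self, x: Vector) -> Vector:
        return [sum(w * xi for w, xi in zip(row, x)) + bi
                for row, bi in zip(self.W, self.b)]


class Discriminator:
    """Private bottom layer -> ReLU -> (shared) top layer -> sigmoid."""

    def __init__(self, bottom: Linear, top: Linear) -> None:
        self.bottom = bottom
        self.top = top

    def __call__(self, x: Vector) -> float:
        h = [max(0.0, v) for v in self.bottom(x)]  # low-level features (private)
        logit = self.top(h)[0]                      # high-level decision (shared)
        # Plain sigmoid: fine for the small logits here, not overflow-safe in general.
        return 1.0 / (1.0 + math.exp(-logit))      # estimated P(x is real)


def make_coupled_discriminators(in_dims: List[int], hidden_dim: int,
                                seed: int = 0) -> List[Discriminator]:
    rng = random.Random(seed)
    # Top layer: ONE object shared by every domain's discriminator.
    shared_top = Linear(hidden_dim, 1, rng)
    # Bottom layers: one private layer per domain, each mapping to hidden_dim.
    return [Discriminator(Linear(d, hidden_dim, rng), shared_top) for d in in_dims]
```

For example, `make_coupled_discriminators([3, 1], hidden_dim=8)` builds two discriminators whose inputs have 3 and 1 features respectively but whose final decision layer is the same object.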

Adversarial training on its own only encourages each generated image to individually resemble images in its respective domain; it does not require the two images to correspond. The weight-sharing constraint is what couples them.
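One way to write the resulting training objective, using \(f\) for discriminators and \(g\) for generators, is as the sum of two GAN objectives, one per domain, plus the weight-sharing constraints. In this sketch, \(\theta_{g_j^{(i)}}\) denotes the parameters of the \(i\)-th layer of \(g_j\) counted from the bottom, \(n_1\) and \(n_2\) are the numbers of layers in \(f_1\) and \(f_2\), and \(k\) and \(l\) are the numbers of shared generator and discriminator layers.

```latex
\begin{aligned}
\max_{g_1, g_2} \min_{f_1, f_2} V(f_1, f_2, g_1, g_2) \equiv{}
  & \mathbb{E}_{x_1 \sim p_{X_1}}\left[-\log f_1(x_1)\right]
    + \mathbb{E}_{z \sim p_Z}\left[-\log\left(1 - f_1(g_1(z))\right)\right] \\
  & + \mathbb{E}_{x_2 \sim p_{X_2}}\left[-\log f_2(x_2)\right]
    + \mathbb{E}_{z \sim p_Z}\left[-\log\left(1 - f_2(g_2(z))\right)\right], \\
\text{subject to}\quad
  & \theta_{g_1^{(i)}} = \theta_{g_2^{(i)}}, \quad i = 1, \dots, k, \\
  & \theta_{f_1^{(n_1 - i)}} = \theta_{f_2^{(n_2 - i)}}, \quad i = 0, \dots, l - 1.
\end{aligned}
```

Without the two constraints, the objective splits into two independent GAN games, which is why adversarial training alone only makes each image individually realistic. Both generators receive the same \(z\), so \((g_1(z), g_2(z))\) is a sample from a joint distribution over the two domains.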

Experiments

Digit Pair Generation

Weight Sharing

Comparison with Conditional Generative Models

Pair Face Generation

RGB and Depth Image Generation

Applications

Unsupervised Domain Adaptation (UDA)

Cross-domain Image Transformation