BoxSup

(ICCV 2015) BoxSup: Exploiting Bounding Boxes to Supervise Convolutional Networks for Semantic Segmentation

Paper: http://www.cv-foundation.org/openaccess/content_iccv_2015/papers/Dai_BoxSup_Exploiting_Bounding_ICCV_2015_paper.pdf

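Proposes a method that alternates between generating region proposals and training the CNN, using bounding boxes to partially replace masks when training for image semantic segmentation.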

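For samples that have only bounding box ground truth, Multiscale Combinatorial Grouping (MCG) is used to generate candidate segmentation masks, and the labels are optimized so that the mask with the largest average overlap with the bbox is selected as the supervision signal.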

For samples that have segmentation ground truth, the FCN parameters are updated directly.

Such pixel-accurate supervision demands expensive labeling effort and limits the performance of deep networks that usually benefit from more training data.

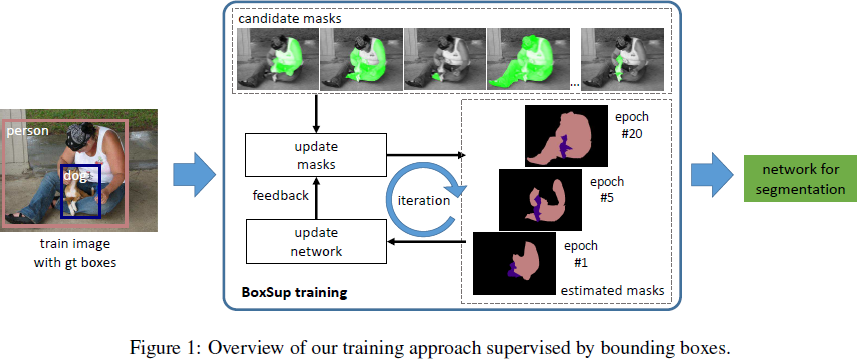

We propose a method that achieves competitive accuracy but only requires easily obtained bounding box annotations. The basic idea is to iterate between automatically generating region proposals and training convolutional networks.

Introduction

Pixel-level mask annotations are time-consuming, frustrating, and in the end commercially expensive to obtain.

Though these box-level annotations are less precise than pixel-level masks, their amount may help improve training deep networks for semantic segmentation.

In addition, current leading approaches have not fully utilized the detailed pixel-level annotations.

In this work, we investigate bounding box annotations as an alternative or extra source of supervision to train convolutional networks for semantic segmentation.

We resort to region proposal methods [4, 33, 2] to generate candidate segmentation masks.

The convolutional network is trained under the supervision of these approximate masks.

We extensively evaluate our method, called “BoxSup”, on the PASCAL segmentation benchmarks [9, 26].

We may save expensive labeling effort by relying mainly on bounding box annotations.

Our error analysis reveals that a BoxSup model trained with a large set of boxes effectively increases the object recognition accuracy (the accuracy in the middle of an object), and its improvement on object boundaries is secondary.

Related Work

Baseline

We adopt our implementation of FCN refined by CRF [6] as the mask-supervised baseline.

Approach

Segment Proposals for Supervised Training

To harness the bounding box annotations, we need to estimate segmentation masks from them.

We propose to generate a set of candidate segments using region proposal methods (e.g., Selective Search [33]) due to their nice properties:

Region proposal methods have high recall rates [2], so a good candidate is likely to be in the proposal pool.

Region proposal methods generate candidates of greater variance, which provide a kind of data augmentation [20] for network training.

The region proposals are used only for network training.

Formulation

As a pre-processing step, we use a region proposal method to generate segmentation masks.

We adopt Multiscale Combinatorial Grouping (MCG) [2] by default.

The proposal candidate masks are fixed throughout the training procedure. But during training, each candidate mask will be assigned a label which can be a semantic category or background. The labels assigned to the masks will be updated.

Given a ground-truth bounding box annotation, we expect the labeling to pick out a candidate mask that overlaps the box as much as possible.

\[\varepsilon_o = \frac{1}{N} \sum_S (1 - IoU(B, S)) \delta(l_B, l_S)\]
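A minimal sketch of the overlap term, under stated assumptions: each candidate segment is represented as a set of (x, y) pixel coordinates, IoU(B, S) is computed between the ground-truth box and the tight bounding box of the segment, and N is the number of candidate segments. The names `tight_box`, `box_iou`, and `overlap_term` are illustrative and do not come from the paper.

```python
def tight_box(segment):
    """Tightest box (x0, y0, x1, y1), inclusive, around a set of (x, y) pixels."""
    xs = [x for x, _ in segment]
    ys = [y for _, y in segment]
    return (min(xs), min(ys), max(xs), max(ys))


def box_iou(a, b):
    """IoU of two boxes given as inclusive pixel coordinates (x0, y0, x1, y1)."""
    inter_w = min(a[2], b[2]) - max(a[0], b[0]) + 1
    inter_h = min(a[3], b[3]) - max(a[1], b[1]) + 1
    inter = max(inter_w, 0) * max(inter_h, 0)
    area_a = (a[2] - a[0] + 1) * (a[3] - a[1] + 1)
    area_b = (b[2] - b[0] + 1) * (b[3] - b[1] + 1)
    return inter / (area_a + area_b - inter)


def overlap_term(gt_box, gt_label, segments, labels):
    """epsilon_o = (1/N) * sum_S (1 - IoU(B, S)) * delta(l_B, l_S)."""
    total = 0.0
    for segment, label in zip(segments, labels):
        if label == gt_label:  # delta(l_B, l_S) is 1 only for matching labels
            total += 1.0 - box_iou(gt_box, tight_box(segment))
    return total / len(segments)


# Toy example: one segment fills the box exactly, the other lies outside it.
inside = {(x, y) for x in range(2, 6) for y in range(2, 6)}
outside = {(x, y) for x in range(0, 2) for y in range(0, 2)}
gt_box = (2, 2, 5, 5)
good = overlap_term(gt_box, 1, [inside, outside], [1, 0])  # label the aligned segment
bad = overlap_term(gt_box, 1, [inside, outside], [0, 1])   # label the misaligned segment
```

In this toy case, giving the box label to the well-aligned segment yields the lower cost, which is the behavior the overlap term is meant to reward.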

With the candidate masks and their estimated semantic labels, we can supervise the deep convolutional network with the same per-pixel loss as in standard FCN training (Eqn. (1) in the paper).

\[\varepsilon_r = \sum_p e(X_\theta(p), l_S(p))\]
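As a rough illustration of the regression term, the sketch below sums a per-pixel loss over one image. It assumes that \(e\) is the log loss (cross-entropy) on the network's per-pixel class probabilities, which is the usual choice for FCN training; the nested-list data layout and the name `regression_term` are illustrative only.

```python
import math


def regression_term(probs, segment_labels):
    """epsilon_r = sum_p e(X_theta(p), l_S(p)), with e assumed to be the log loss.

    probs[y][x] is a list of class probabilities predicted at pixel (y, x);
    segment_labels[y][x] is the integer label the candidate segments assign there.
    """
    total = 0.0
    for prob_row, label_row in zip(probs, segment_labels):
        for class_probs, label in zip(prob_row, label_row):
            total += -math.log(class_probs[label])
    return total


# Toy 1x2 image with two classes (0 = background, 1 = object).
probs = [[[0.5, 0.5], [0.25, 0.75]]]
segment_labels = [[0, 1]]
loss = regression_term(probs, segment_labels)
```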

We minimize an objective function that combines the above two terms:

\[\min_{\theta,\{l_S\}} \sum_i (\varepsilon_o + \lambda \varepsilon_r)\]

Here the summation \(\sum_i\) runs over all training images, and \(\lambda = 3\) is a fixed weighting parameter.

Training Algorithm

Next we propose a greedy iterative solution to find a local optimum.

We only consider the case in which one ground-truth bounding box can “activate” (i.e., assign a non-background label to) one and only one candidate.

The optimization procedure may be trapped in poor local optima.

We further adopt a random sampling method to select the candidate segment for each ground-truth bounding box. Instead of selecting the single segment with the smallest cost \(\varepsilon_o + \lambda \varepsilon_r\), we randomly sample a segment from the first \(k\) segments with the smallest costs.
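The selection step can be sketched as follows. This is an illustration under assumptions: the draw among the \(k\) cheapest candidates is taken to be uniform, the per-candidate values of the two cost terms are given as plain numbers, and the helper names `segment_cost` and `sample_segment` are hypothetical.

```python
import random


def segment_cost(eps_o, eps_r, lam=3.0):
    """Cost of one candidate: epsilon_o + lambda * epsilon_r."""
    return eps_o + lam * eps_r


def sample_segment(costs, k, rng=random):
    """Return the index of a candidate drawn uniformly from the k lowest costs."""
    ranked = sorted(range(len(costs)), key=lambda i: costs[i])
    return rng.choice(ranked[:k])


# Toy (epsilon_o, epsilon_r) pairs for four candidates of one ground-truth box.
terms = [(0.6, 0.1), (0.1, 0.0), (0.2, 0.1), (0.3, 0.0)]
costs = [segment_cost(o, r) for o, r in terms]
rng = random.Random(0)  # seeded only to make the toy run repeatable
picks = {sample_segment(costs, k=2, rng=rng) for _ in range(50)}
greedy = sample_segment(costs, k=1)  # k = 1 recovers the purely greedy choice
```

With `k=1` the function always returns the cheapest candidate; a larger `k` lets the labeling occasionally pick a slightly worse candidate, which is the mechanism the text describes for escaping poor local optima.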

With the semantic labeling \(\{l_S\}\) of all candidate segments fixed, we update the network parameters \(\theta\). In this case, the problem reduces to standard FCN training.

We iteratively perform the above two steps, fixing one set of variables and solving for the other set. For each iteration, we update the network parameters using one training epoch (i.e., all training images are visited once), and after that we update the segment labeling of all images.
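The alternation can be written as a short loop. The sketch below is only a skeleton: `train_one_epoch` and `update_labeling` are hypothetical callables supplied by the caller, and the initial labeling is assumed to be given, since these notes do not say how it is obtained.

```python
def train_boxsup(params, labeling, images, train_one_epoch, update_labeling, num_iterations):
    """Alternate between updating theta (labels fixed) and the labels (theta fixed)."""
    for _ in range(num_iterations):
        # Step 1: one epoch of FCN training against the current segment labels.
        params = train_one_epoch(params, images, labeling)
        # Step 2: re-assign labels to the fixed candidate segments of every image.
        labeling = update_labeling(params, images, labeling)
    return params, labeling


# Toy stand-ins that only record the call order; they do no real training.
log = []


def toy_train(params, images, labeling):
    log.append("train")
    return params + 1


def toy_update(params, images, labeling):
    log.append("label")
    return {image: params for image in images}


final_params, final_labeling = train_boxsup(
    0, {}, ["img0", "img1"], toy_train, toy_update, num_iterations=2
)
```

Passing the two steps in as callables keeps the loop independent of any particular network or proposal method.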

The labeling \(l(p)\) is given by candidate proposals as above if a sample only has ground-truth boxes, and is simply assigned as the true label if a sample has ground-truth masks.

Experiments

Experiments on PASCAL VOC 2012

Comparisons of Supervision Strategies

This indicates that in practice we can avoid the expensive mask labeling effort by using only bounding boxes, with small accuracy loss.

This means that we can greatly reduce the labeling effort by dominantly using bounding box annotations.

Error Analysis

The error in semantic segmentation can be roughly thought of as two types:

Recognition error, which is due to confusion between object categories.

Boundary error, which is due to misalignment of pixel-level labels on object boundaries.

To analyze the error, we separately evaluate the performance on the boundary regions and interior regions.
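One way to make this split concrete is sketched below. It is a simplification under assumptions: a pixel counts as "boundary" if a pixel with a different ground-truth label lies within `width` pixels (Chebyshev distance), everything else is "interior", and the score is plain pixel accuracy, which may differ from the metric used in the paper. The names `boundary_band` and `split_accuracy` are illustrative.

```python
def boundary_band(gt, width):
    """Set of (y, x) pixels that lie within `width` pixels of a label change."""
    h, w = len(gt), len(gt[0])
    band = set()
    for y in range(h):
        for x in range(w):
            for dy in range(-width, width + 1):
                for dx in range(-width, width + 1):
                    ny, nx = y + dy, x + dx
                    if 0 <= ny < h and 0 <= nx < w and gt[ny][nx] != gt[y][x]:
                        band.add((y, x))
    return band


def split_accuracy(pred, gt, width):
    """Pixel accuracy computed separately on boundary and interior regions."""
    band = boundary_band(gt, width)
    hits = {"boundary": [0, 0], "interior": [0, 0]}  # [correct, total]
    for y, row in enumerate(gt):
        for x, label in enumerate(row):
            region = "boundary" if (y, x) in band else "interior"
            hits[region][0] += int(pred[y][x] == label)
            hits[region][1] += 1
    return {name: (c / n if n else None) for name, (c, n) in hits.items()}


# Toy 1x6 image: the prediction is wrong only at one pixel next to the boundary.
gt = [[0, 0, 0, 1, 1, 1]]
pred = [[0, 0, 1, 1, 1, 1]]
result = split_accuracy(pred, gt, width=1)
```

In the toy example the interior is fully correct while the boundary band is only half correct, so the two numbers separate recognition quality from boundary alignment.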

Correctly recognizing the interior may also help improve the boundaries (e.g., due to the CRF post-processing).

So the improvement of the extra boxes on the boundary regions is secondary.

Comparisons of Estimated Masks for Supervision

This indicates the importance of the mask quality for supervision.

Comparisons of Region Proposals

Our method effectively makes use of the higher quality segmentation masks to train a better network.

Comparisons on the Test Set

Exploiting Boxes in PASCAL VOC 2007

Baseline Improvement

To show the potential of our BoxSup method in parallel with improvements on the baseline, we use a simple test-time augmentation to boost our results.

Experiments on PASCAL-CONTEXT

To train a BoxSup model for this dataset, we first use the box annotations from all 80 object categories in the COCO dataset to train the FCN (using VGG-16).

Conclusion

The proposed BoxSup method can effectively harness bounding box annotations to train deep networks for semantic segmentation.