Simple Does It

- Introduction

- Related work

- From boxes to semantic labels

- Semantic labelling results

- From boxes to instance segmentation

- Instance segmentation results

- Conclusion

(CVPR 2017) Simple Does It: Weakly Supervised Instance and Semantic Segmentation

Paper: https://arxiv.org/abs/1603.07485

Project Page: https://www.mpi-inf.mpg.de/departments/computer-vision-and-multimodal-computing/research/weakly-supervised-learning/simple-does-it-weakly-supervised-instance-and-semantic-segmentation/

提出递归训练、合理使用GrabCut之类的算法来弱监督实例级的语义分割。

\(Box\):递归训练,初始时使用整个bbox作为分割ground-truth,训练完一轮后加上3个后处理(去掉不在bbox内的预测,恢复IoU过小的bbox,使用DenseCRF过滤)。

\(Box^i\):同Box,除了初始时使用bbox中心20%区域作为分割ground-truth。

\(GrabCut+/GrabCut+^i\):同\(Box/Box^i\),初始时使用修改版GrabCut的结果/多个GrabCut+超过一定阈值的结果。

\(MCG \cap GrabCut+\):同GrabCut+,初始时使用MCG和GrabCut+同时标为前景的像素作为ground-truth。

Introduction

one of their main weaknesses is that they need a large number of training samples for high quality results.

contributions:

We explore recursive training of convnets for weakly supervised semantic labelling, discuss how to reach good quality results, and what are the limitations of the approach.

We show that state of the art quality can be reached when properly employing GrabCut-like algorithms to generate training labels from given bounding boxes, instead of modifying the segmentation convnet training procedure.

We report the best known results when training using bounding boxes only, both using Pascal VOC12 and VOC12+COCO training data, reaching comparable quality with the fully supervised regime.

We are the first to show that similar results can be achieved for the weakly supervised instance segmentation task.

Related work

Semantic labelling

Weakly supervised semantic labelling

Instance segmentation

From boxes to semantic labels

There are two sources of information: the annotated boxes and priors about the objects.

We integrate these in the following cues:

C1 Background. Since the bounding boxes are expected to be exhaustive, any pixel not covered by a box is labelled as background.

C2 Object extend. The box annotations bound the extent of each instance. Assuming a prior on the objects shapes (e.g. oval-shaped objects are more likely than thin bar or full rectangular objects), the box also gives information on the expected object area. We employ this size information during training.

C3 Objectness. Two priors typically used are spatial continuity and having a contrasting boundary with the background. In general we can harness priors about object shape by using segment proposal techniques [35], which are designed to enumerate and rank plausible object shapes in an area of the image.

Box baselines

Given an annotated bounding box and its class label, we label all pixels inside the box with such given class. If two boxes overlap, we assume the smaller one is in front.

Recursive training

We name this recursive training approach \(Naive\).

The recursive training is enhanced by de-noising the convnet outputs using extra information from the annotated boxes and object priors. Between each round we improve the labels with three post-processing stages:

Any pixel outside the box annotations is reset to background label (cue C1).

If the area of a segment is too small compared to its corresponding bounding box (e.g. IoU < 50%), the

box area is reset to its initial label (fed in the first round). This enforces a minimal area (cue C2).As it is common practice among semantic labelling methods, we filter the output of the network to better respect the image boundaries. (We use DenseCRF [20] with the DeepLabv1 parameters [5]). In our weakly supervised scenario, boundary-aware filtering is particularly useful to improve objects delineation (cue C3).

The recursion and these three post-processing stages are crucial to reach good performance. We name this recursive training approach \(Box\), and show an example result in Figure 2.

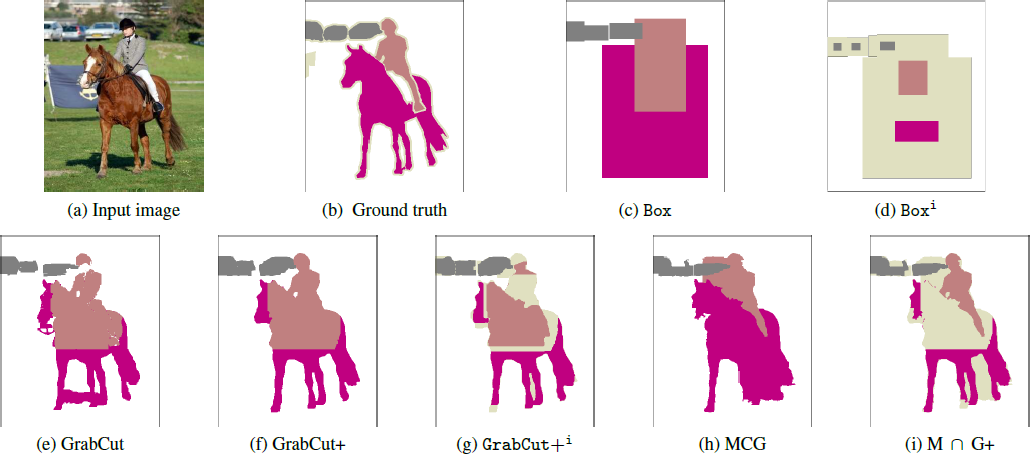

Figure 2: Example results of using only rectangle segments and recursive training (using convnet predictions as supervision for the next round).

Ignore regions

We also consider a second variant \(Box^i\) that, instead of using filled rectangles as initial labels, we fill in the 20% inner region, and leave the remaining inner area of the bounding box as ignore regions.

Following cues C2 and C3 (shape and spatial continuity priors), the 20% inner box region should have higher chances of overlapping with the corresponding object, reducing the noise in the generated input labels.

Box-driven segments

GrabCut baselines

We propose to use a modified version of GrabCut, which we call \(GrabCut+\), where HED boundaries [43] are used as pairwise term instead of the typical RGB colour difference.

Similar to \(Box^i\), we also consider a \(GrabCut+^i\) variant.

For each annotated box we generate multiple (∼ 150) perturbed GrabCut+ outputs. If 70% of the segments mark the pixel as foreground, the pixel is set to the box object class. If less than 20% of the segments mark the pixels as foreground, the pixel is set as background, otherwise it is marked as ignore. The perturbed outputs are generated by jittering the box coordinates (±5%) as well as the size of the outer background region considered by GrabCut (from 10% to 60%).

Adding objectness

As final stage the MCG algorithm includes a ranking based on a decision forest trained over the Pascal VOC 2012 dataset.

Given a box annotation, we pick the highest overlapping proposal as a corresponding segment.

Inside the annotated boxes, we mark as foreground pixels where both MCG and GrabCut+ agree; the remaining ones are marked as ignore. We denote this approach as \(MCG \cap GrabCut+\) or \(M \cap G+\) for short.

Figure 3: Example of the different segmentations obtained starting from a bounding box annotation. Grey/pink/magenta indicate different object classes, white is background, and ignore regions are beige. \(M \cap G+\) denotes \(MCG \cap GrabCut+\).

Semantic labelling results

Experimental setup

Datasets

Pascal VOC12 segmentation benchmark: 1464 training, 1449 validation, and 1456 test images. augmented

set of 10582 training images.

we use additional training images from the COCO [25] dataset. We only consider images that contain any of the 20 Pascal classes and (following [48]) only objects with a bounding box area larger than 200 pixels. After this filtering, 99 310 images remain (from training and validation sets), which are added to our training set.

Evaluation

We use the “comp6” evaluation protocol.

The performance is measured in terms of pixel intersectionover-union averaged across 21 classes (mIoU).

Implementation details

For all our experiments we use the DeepLab-LargeFOV network, using the same train and test parameters as [5].

We use a mini-batch of 30 images for SGD and initial learning rate of 0:001, which is divided by 10 after a 2k/20k iterations (for Pascal/COCO). At test time, we apply DenseCRF [20].

Main results

Box results

Box-driven segment results

| Method | val.mIoU |

|---|---|

| Fast-RCNN | 44.3 |

| GT Boxes | 62.2 |

| Box | 61.2 |

| Box^i | 62.7 |

| MCG | 62.6 |

| GrabCut+ | 63.4 |

| \(GrabCut+^i\) | 64.3 |

| \(M \cap G+\) | 65.7 |

| DeepLab_ours | 69.1 |

Table 1: Weakly supervised semantic labelling results for our baselines. Trained using Pascal VOC12 bounding boxes alone, validation set results. DeepLabours indicates our fully supervised result.

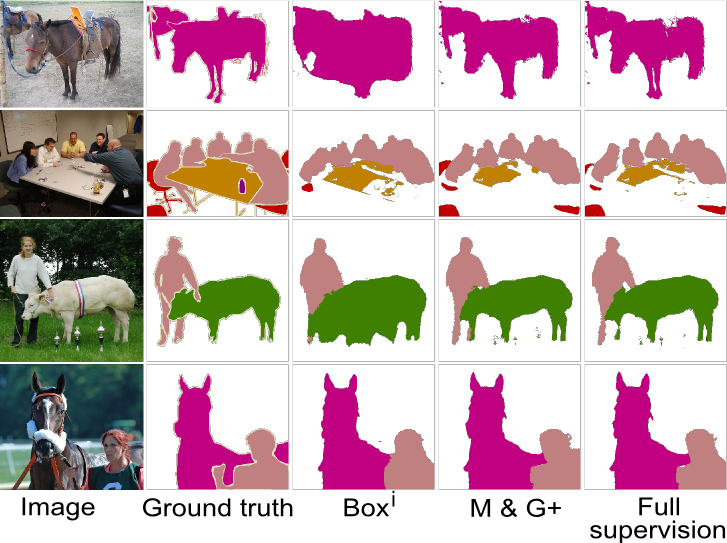

Figure 5: Qualitative results on VOC12. Visually, the results from our weakly supervised method \(M \cap G+\) are hardly distinguishable from the fully supervised ones.

Additional results

Boundaries supervision

Different convnet results

From boxes to instance segmentation

Instance segmentation results

Experimental setup

For our experiments we use a re-implementation of the DeepMask [33] architecture, and additionally we repurpose a DeepLabv2 VGG-16 network [6] for the instance segmentation task, which we name \(DeepLab_{BOX}\).

Baselines

Analysis

Conclusion

We showed that when carefully employing the available cues, recursive training using only rectangles as input can be surprisingly effective (\(Box^i\)). Even more, when using box-driven segmentation techniques and doing a good balance between accuracy and recall in the noisy training segments, we can reach state of the art performance without modifying the segmentation network training procedure (\(M \cap G+\)).

In future work we would like to explore co-segmentation ideas (treating the set of annotations as a whole), and consider even weaker forms of supervision.