Multiple Human Parsing

- Introduction

- Related work

- The MHP dataset

- Multiple-Human Parsing Methods

- Experiments

- Conclusion and future work

Towards Real World Human Parsing: Multiple-Human Parsing in the Wild

Paper: https://arxiv.org/pdf/1705.07206.pdf

提出多人语义分割数据集:4980张图片(训练/验证/测试:3000/1000/980),每张包含2-16人,18个语义标签。

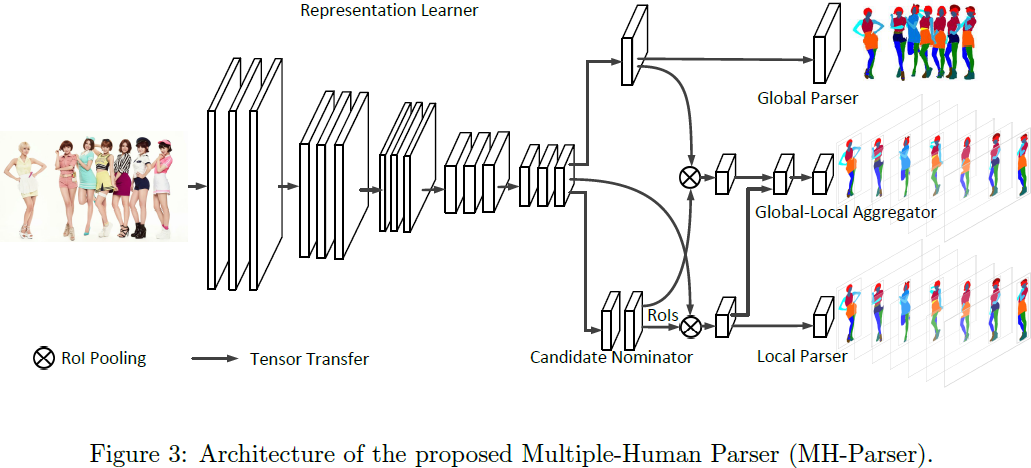

多人分割模型MH-Parser包含5个组件:

Representation learner (FCN提特征)

Global parser (用特征生成分割图)

Candidate nominator (RPN生成bbox)

Local parser (使用特征和bbox生成局部分割)

Global-local aggregator (结合全局和局部信息得到最终每个人的分割)

we introduce the Multiple-Human Parsing (MHP) dataset, which contains multiple persons in a real world scene per single image.

The MHP dataset contains various numbers of persons (from 2 to 16) per image with 18 semantic classes for each parsing annotation. Persons appearing in the MHP images present sufficient variations in pose, occlusion and interaction.

To tackle the multiple-human parsing problem, we also propose a novel Multiple-Human Parser (MH-Parser), which considers both the global context and local cues for each person in the parsing process.

Introduction

all the human parsing datasets only contain one person per image, while usually multiple persons appear simultaneously in a realistic scene.

Previous work on human parsing mainly focuses on the problem of parsing in controlled and simplified conditions.

simultaneous presence of multiple persons.

we tackle the problem of person detection and human parsing simultaneously so that both the global information and the local information are employed.

contributions:

We introduce the multiple-human parsing problem that extends the research scope of human parsing and matches real world scenarios better in various applications.

We construct a new large-scale benchmark, named Multiple-Human Parsing (MHP) dataset, to advance the development of relevant techniques.

We propose a novel MH-Parser model for multiple-human parsing, which integrates global context as well as local cues for human parsing and significantly outperforms the naive "detect-and-parse" approach.

Related work

Human parsing

Instance-aware object segmentation

The MHP dataset

this is the first large scale dataset focusing on multiple-human parsing.

4980 images, each image contains 2 to 16 humans, totally there are 14969 person level annotations.

Image collection and annotation methodology

we manually specify several underlying relationships (e.g., family, couple, team, etc.), and several possible scenes (e.g., sports, conferences, banquets, etc.)

The first task is manually counting the number of foreground persons and duplicating each image into several copies according to that number.

the second is to assign the fine-grained pixel-wise label for each instance.

Dataset statistics

training/validation/test: 3000/1000/980 (randomly choose)

The images in the MHP dataset contain diverse human numbers, appearances, viewpoints and relationships (see Figure 1).

Multiple-Human Parsing Methods

MH-Parser

The proposed MH-Parser has five components:

Representation learner

We use a trunk network to learn rich and discriminative representations. we preserve the spatial information of the image by employing fully convolutional neural networks.

images and annotations => representations

Global parser

capture the global information of the whole image. The global parser takes the representation from the representation learner and generates a semantic parsing map of the whole image.

representations => a semantic parsing map of the whole image

Candidate nominator

We use a candidate nominator to generate local regions of interest. The candidate nominator consists of a Region Proposal Network (RPN).

representations => candidate box

Local parser

give a fine-grained prediction of the semantic parsing labels for each person in the image.

representations, candidate box => semantic parsing labels for each person

Global-local aggregator

leverages both the global and local information when performing the parsing task of each person.

the hidden representations from both the local parser and the global parser => a set of semantic parsing predictions for each candidate box

Detect-and-parse baseline

In the detection stage, we use the representation learner and the candidate nominator as the detection model.

In the parsing stage, we use the representation learner and the local prediction as the parsing model.

Experiments

Performance evaluation

The goal of multiple-human parsing is to accurately detect the persons in one image and generate semantic category predictions for each pixel in the detected regions.

Mean average precision based on pixel (\(mAP^p\))

we adopt pixel-level IOU of different semantic categories on a person.

Percentage of correctly segmented body parts (PCP)

evaluate how well different semantic categories on a human are segmented.

Global Mean IOU

evaluates how well the overall parsing predictions match the overall global parsing labels.

Implementation details

representation learner

adopt a residual network [19] with 50 layers, contains all the layers in a standard residual network except the fully connected layers.

input: an image with the shorter side resized to 600 pixels and the longer side no larger than 1000 pixels

output: 1/16 of the spatial dimension of the input image

global parser

add a deconvolution layer after the representation learner.

output: a feature map with spatial dimension 1/8 of the input image

candidate nominator

use region proposal network (RPN) to generate region proposals.

output: region proposals

local parser

based on the region after Region of Interest (ROI) pooling from the representation learner and the size after pooling is 40.

global-local aggregator

the local part is from the hidden layer in the local parser, and the global part uses the feature after ROI pooling from the hidden layer of the global parser with the same pooled size.

The network is optimized with one image per batch and the optimizer used is Adam [20].

Experimental analysis

Overall performance evaluation

RL stands for the representation learner, G means the global parser, L denotes the local parser, A for aggregator.

Qualitative comparison

We can see that the MH-Parser captures more fine-grained details compared to the global parser, as some categories with a small number of pixels are accurately predicted.

Conclusion and future work

In this paper, we introduced the multiple-human parsing problem and a new large-scale MHP dataset for developing and evaluating multiple-human parsing models.

We also proposed a novel MH-Parser algorithm to address this new challenging problem and performed detailed evaluations of the proposed method with different baselines on the new benchmark dataset.