DSD

DSD: Regularizing Deep Neural Networks with Dense-Sparse-Dense Training Flow

Paper: https://arxiv.org/pdf/1607.04381v1.pdf

Model Zoo: https://songhan.github.io/DSD/

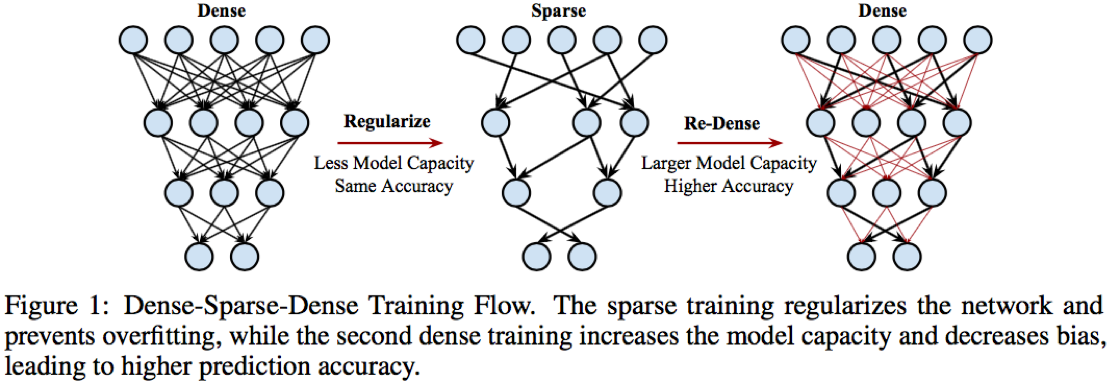

We propose DSD, a dense-sparse-dense training flow, for regularizing deep neural networks to achieve higher accuracy. DSD training can improve the prediction accuracy of a wide range of neural networks (CNNs, RNNs and LSTMs) on the tasks of image classification, caption generation and speech recognition. The DSD training flow produces the same model architecture and doesn't incur any inference time overhead.

Introduction

Simply reducing the model capacity would miss the relevant relations between features and target outputs, leading to under-fitting and a high bias.

We propose the dense-sparse-dense (DSD) training flow, a novel training method that first regularizes the model through sparsity-constrained optimization, and then increases the model capacity by recovering and retraining the pruned weights.

At test time, the final model produced by DSD training still has the same architecture and dimension as the original dense model, and DSD training doesn’t incur any inference overhead.

DSD Training Flow

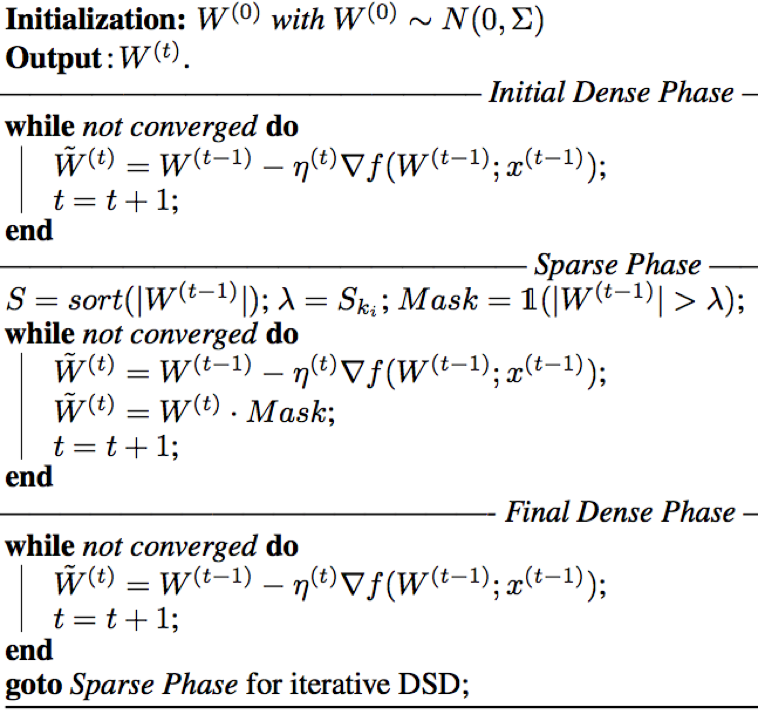

Initial Dense Training: learns the connectivity via normal network training on the dense network.

The goal is to learn which connections are important.

Sparse Training: prunes the low-weight connections and retrains the sparse network.

This truncation-based procedure has a provable advantage in statistical accuracy over its non-truncated counterparts [5, 6, 7].

We use sensitivity-based analysis [8, 9] to find a separate threshold for each layer.
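A per-layer magnitude threshold can be sketched as follows. This is a minimal illustration, not the authors' implementation: it assumes the target sparsity of each layer has already been chosen (the sensitivity analysis itself is not shown), and the layer names, shapes and sparsity values are hypothetical.

```python
import numpy as np

def layer_threshold(weights, sparsity):
    """Return the magnitude at or below which weights are pruned to reach `sparsity`."""
    k = int(sparsity * weights.size)           # number of weights to remove
    if k == 0:
        return 0.0
    magnitudes = np.sort(np.abs(weights).ravel())
    return float(magnitudes[k - 1])            # k-th smallest magnitude

def prune_mask(weights, sparsity):
    """Boolean mask that keeps only weights strictly above the layer's threshold."""
    return np.abs(weights) > layer_threshold(weights, sparsity)

rng = np.random.default_rng(0)
# Hypothetical layers and per-layer sparsity targets (assumed, not from the paper).
layers = {"conv1": rng.normal(size=(8, 8)), "fc1": rng.normal(size=(32, 16))}
target_sparsity = {"conv1": 0.25, "fc1": 0.75}

masks = {name: prune_mask(w, target_sparsity[name]) for name, w in layers.items()}
achieved = {name: 1.0 - float(m.mean()) for name, m in masks.items()}
```

Each layer gets its own threshold, so a layer that tolerates pruning well can be made much sparser than a sensitive one.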

The S step adds the sparsity constraint as a strong regularization to prevent over-fitting.

Final Dense Training: recovers the pruned connections, making the network dense again.

These previously-pruned connections are initialized to zero and retrained with 1/10 the original learning rate (since the sparse network is already at a good local minimum).

Dropout ratios and weight decay remained unchanged.

Restoring the pruned connections increases the dimensionality of the network, and more parameters make it easier for the network to slide down the saddle point to arrive at a better local minimum.

This step adds model capacity and lets the model have less bias.

Algorithm 1: Workflow of DSD training
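The three phases can be sketched end to end on a toy problem. The example below is an assumption-laden illustration rather than the paper's algorithm listing: it uses a small linear regression trained by plain gradient descent in place of a deep network, a single 50% sparsity level, and hypothetical helper names (`train`, `magnitude_mask`, `mse`). Dropout and weight decay are omitted.

```python
import numpy as np

def mse(w, X, y):
    """Mean squared error of the linear model X @ w."""
    return float(np.mean((X @ w - y) ** 2))

def train(w, X, y, lr, steps, mask=None):
    """Gradient descent on MSE; if `mask` is given, pruned weights are held at zero."""
    for _ in range(steps):
        grad = 2.0 * X.T @ (X @ w - y) / len(y)
        w = w - lr * grad
        if mask is not None:
            w = w * mask                       # re-apply the sparsity constraint
    return w

def magnitude_mask(w, sparsity):
    """Keep the largest-magnitude weights; drop the smallest `sparsity` fraction."""
    k = int(sparsity * w.size)
    if k == 0:
        return np.ones(w.shape, dtype=bool)
    threshold = np.sort(np.abs(w).ravel())[k - 1]
    return np.abs(w) > threshold

rng = np.random.default_rng(0)
X = rng.normal(size=(200, 20))
y = X @ rng.normal(size=20) + 0.1 * rng.normal(size=200)
lr = 0.05

# D: initial dense training.
w_dense = train(np.zeros(20), X, y, lr, steps=200)

# S: prune low-magnitude weights, then retrain under the mask.
mask = magnitude_mask(w_dense, sparsity=0.5)
w_sparse = train(w_dense * mask, X, y, lr, steps=200, mask=mask)

# D: drop the mask; pruned weights restart from zero, learning rate is lr / 10.
w_final = train(w_sparse, X, y, lr / 10, steps=200)
```

The final model has the same shape as the initial dense one, which is why the flow adds no inference overhead.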

Experiments

We applied DSD training to different kinds of neural networks on datasets from different domains. We found that DSD training improved the accuracy for all these networks.

The neural networks are chosen from CNNs, RNNs and LSTMs.

The datasets are chosen from image classification, speech recognition, and caption generation.

AlexNet

BVLC AlexNet obtained from Caffe Model Zoo.

61 million parameters across 5 Conv layers and 3 fully connected layers.

We pruned the network to be 89% sparse (11% non-zeros), the same as in previous work [8].

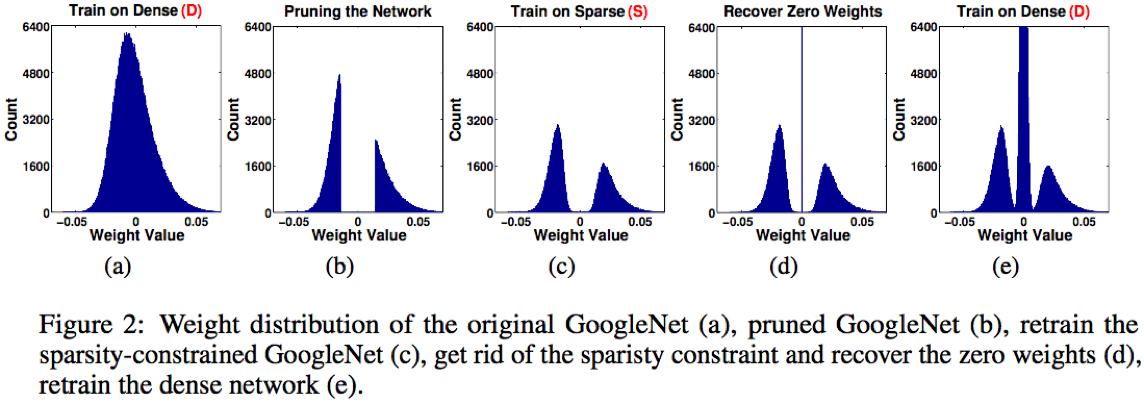

GoogleNet

BVLC GoogleNet model obtained from the Caffe Model Zoo.

13 million parameters and 57 Conv layers.

Following the sensitivity analysis methodology, we pruned the network to be 64% sparse.

VGGNet

VGG-16 model obtained from Caffe Model Zoo.

138 million parameters with 13 Conv layers and 3 fully-connected layers.

We pruned more gently, using sensitivity analysis, to only 35% sparsity.

ResNet

Pre-trained ResNet-50 and ResNet-152 models provided by the original authors.

25 and 60 million parameters, respectively.

We used sensitivity-based analysis to prune all layers (except BN layers) to an overall sparsity of 52% and 55%, respectively.

We performed a second DSD iteration on ResNet-50, pruning the network to be 52% sparse, decreasing the learning rate by another factor of 10, and keeping other factors unchanged (dropout, batch normalization, L2 regularization).
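To put the sparsity levels quoted for the CNNs above in perspective, the short calculation below converts them into approximate non-zero weight counts during the sparse phase. It is a rough estimate that assumes the quoted sparsity applies to the full parameter count, which may not hold exactly for layers excluded from pruning.

```python
# (total parameters, sparsity) as quoted in the text for each network.
models = {
    "AlexNet": (61e6, 0.89),
    "GoogleNet": (13e6, 0.64),
    "VGG-16": (138e6, 0.35),
    "ResNet-50": (25e6, 0.52),
    "ResNet-152": (60e6, 0.55),
}

def remaining_weights(total_params, sparsity):
    """Approximate number of non-zero weights left after pruning."""
    return total_params * (1.0 - sparsity)

for name, (total, sparsity) in models.items():
    kept = remaining_weights(total, sparsity)
    print(f"{name}: about {kept / 1e6:.1f}M non-zero weights in the sparse phase")
```

For AlexNet this gives roughly 6.7 million non-zero weights, consistent with the 11% non-zeros figure.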

SqueezeNet

The baseline model from the Caffe Model Zoo.

4.8 MB in size, with 50x fewer parameters than AlexNet.

Pruned away 67% of the parameters while improving accuracy.

NeuralTalk

The baseline NeuralTalk model we used is the flickr8k_cnn_lstm_v1.p downloaded from NeuralTalk Model Zoo.

6.8 million parameters.

In the pruning step, we pruned all layers except Ws, the word embedding lookup table, to 80% of its original size.

DeepSpeech

The baseline DS1 model is trained for 50 epochs on WSJ training data.

Weights are pruned in the Fully Connected layers and the Bidirectional Recurrent layer only. Each layer is pruned to achieve 50% sparsity.

DeepSpeech 2

Approximately 67 million parameters, which is around 8 times larger than the DS1 model.

The DS2 model is trained using Nesterov SGD for 20 epochs for each training step.

Related Work

Dropout and DropConnect

Model Compression

Sparsity-Constrained Optimization

Discussions

DSD training is more time-consuming than common training methods.

One way to reduce this cost is to prune the model before it converges. Experiments on AlexNet and DeepSpeech showed that we can reduce the training epochs by fusing the D and S training stages.

The most aggressive approach is to train a sparse network from scratch. We pruned half of the parameters of the BVLC AlexNet and initialized a network with the same sparsity pattern. In our two repeated experiments, one model diverged after 80k iterations of training and the other converged at a top-1 accuracy of 33.0% and a top-5 accuracy of 41.6%. Training a sparse network from scratch seems to be difficult.

Another approach is to prune the network during training. We pruned AlexNet after training for 100k and 200k iterations before it converged at 400k.

Two pruning schemes are adopted: magnitude-based pruning (Keepmax-Cut) which we used throughout this paper, and random pruning (Random-Cut).

After pruning, the 100k-iteration model is trained with a learning rate of \(10^{-2}\) and the 200k-iteration one with \(10^{-3}\); both learning rates were found to be optimal.

Keepmax-Cut@200K obtained 0.36% better top-5 accuracy.

Random-Cut, in contrast, greatly harmed the accuracy and caused the model to converge worse.

We conjecture that sparsity helps regularize the network but adds to the difficulty of convergence; therefore, the initial accuracy after pruning is of great importance.
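The difference between the two pruning schemes can be illustrated with a small sketch. The function names `keepmax_cut` and `random_cut` mirror the scheme names above but are otherwise hypothetical, and the "retained energy" measure (fraction of squared weight norm that survives pruning) is a proxy introduced here for illustration; the paper compares the schemes by accuracy, not by this quantity.

```python
import numpy as np

def keepmax_cut(w, sparsity):
    """Magnitude-based pruning: drop the smallest-magnitude weights."""
    k = int(sparsity * w.size)
    order = np.argsort(np.abs(w).ravel())      # indices, smallest magnitude first
    mask = np.ones(w.size, dtype=bool)
    mask[order[:k]] = False
    return mask.reshape(w.shape)

def random_cut(w, sparsity, rng):
    """Random pruning: drop the same number of weights, chosen uniformly."""
    k = int(sparsity * w.size)
    mask = np.ones(w.size, dtype=bool)
    mask[rng.choice(w.size, size=k, replace=False)] = False
    return mask.reshape(w.shape)

def retained_energy(w, mask):
    """Fraction of the squared weight norm kept after applying the mask."""
    return float(np.sum((w * mask) ** 2) / np.sum(w ** 2))

rng = np.random.default_rng(0)
w = rng.normal(size=(64, 64))                  # stand-in for a trained layer

keep_mask = keepmax_cut(w, 0.5)
rand_mask = random_cut(w, 0.5, rng)
keep_energy = retained_energy(w, keep_mask)
rand_energy = retained_energy(w, rand_mask)
```

At equal sparsity, magnitude-based pruning always retains at least as much of the weight norm as random pruning, which is one plausible reason the pruned model starts from a better accuracy.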

In short, we have three observations:

early pruning by fusing the first D and S step together can make DSD training converge faster and better;

magnitude-based pruning learns the correct sparsity pattern better than random pruning;

sparsity from scratch leads to poor convergence.